|

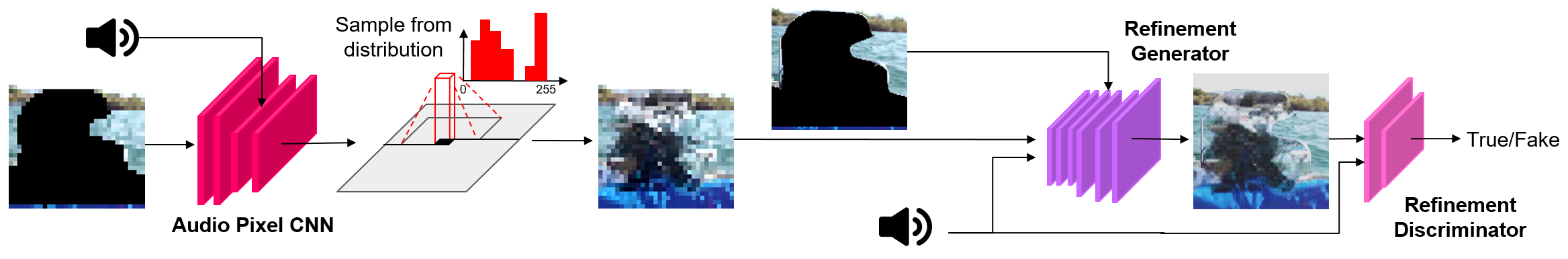

| Fig 1. Imagine a scene where originally there was a plane flying in the sky and later the plane is removed. Probably, when we will inpaint it, it will be completed with a visually consistent texture, but we will miss the plane and the original semantic content in the scene. Clearly, without any additional information, the plane cannot be re- covered. Here, for the first time, we tackle a multi-modal instance of the classical inpainting problem: given a missing image region and an audio sample, the inpainted region should comply with the semantic content of the sound as well as with the surrounding visual appearance. |