DRANet

Dual Reverse Attention Network for Person Re-IDentification

15 June 2021

Authors: Shuangwei Liu; Lin Qi; Yunzhou Zhang; Weidong Shi

URL: https://ieeexplore.ieee.org/document/8804419

Comments: 2019 IEEE International Conference on Image Processing (ICIP)

Categories: attention

What ?

Person re-identification (Re-ID) using a dual reverse attention module. The module creates hard examples by transforming features through channel and spatial reverse attention.

Why ?

Re-ID models generalise poorly to hard examples (occlusion, lighting variation, etc.). Hard-example mining and synthetic data generation are expensive and suffer from convergence problems.

How ?

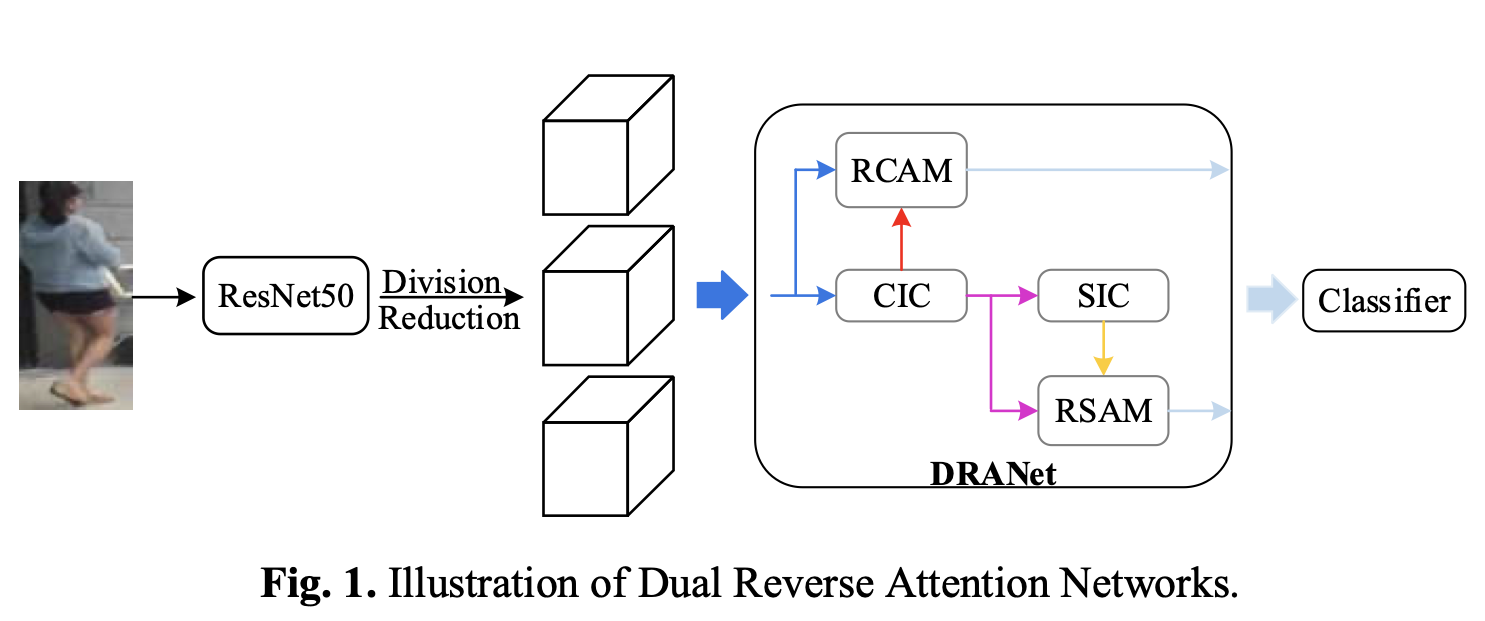

The authors introduce DRANet (Dual Reverse Attention Networks) to generate hard examples in convolutional feature space.

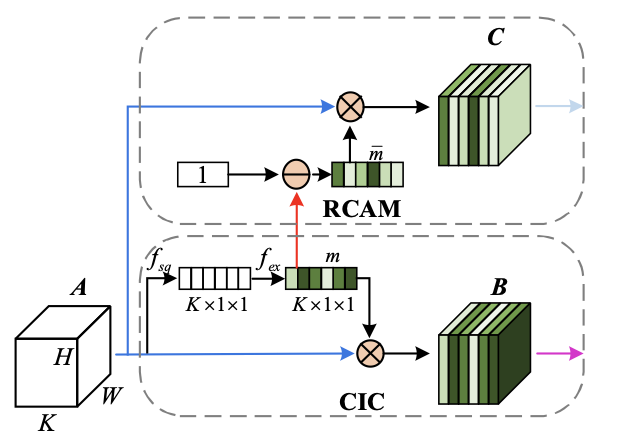

The input image is fed to ResNet50 and the feature maps are divided into three parts (Why ?). Each partial feature then passes sequentially through CIC (Channel Information Capture) and SIC (Spatial Information Capture).

Dual Reverse Attention

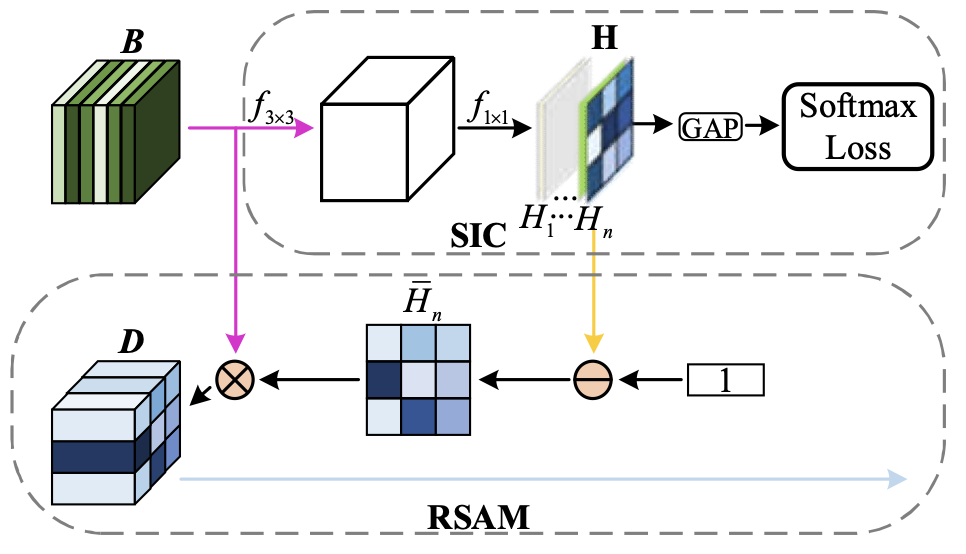

CIC provides a mask ‘m’ that suppresses informative features and so generates channel-based hard examples. Similarly, SIC provides a spatial mask, but ‘only for’ the corresponding person identity ‘n’ (which is only available during training).
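To make the idea of a reverse mask concrete, here is a minimal NumPy sketch. It is not the authors' implementation. It assumes that channel importance can be approximated by global average pooling and that spatial importance for identity ‘n’ can be approximated by a class-activation-style map. The function names, `drop_ratio`, `threshold` and `class_weights` are all hypothetical.

```python
import numpy as np


def channel_reverse_mask(features, drop_ratio=0.25):
    """Return a (C, 1, 1) binary mask that zeroes the most active channels.

    Assumption: channel importance is the global average of each channel.
    """
    num_channels = features.shape[0]
    scores = features.mean(axis=(1, 2))              # one score per channel
    num_drop = max(1, int(round(drop_ratio * num_channels)))
    top = np.argsort(scores)[-num_drop:]             # most active channels
    mask = np.ones(num_channels, dtype=features.dtype)
    mask[top] = 0.0                                  # suppress them
    return mask.reshape(-1, 1, 1)


def spatial_reverse_mask(features, class_weights, identity, threshold=0.5):
    """Return a (1, H, W) binary mask that zeroes the locations which
    respond most strongly to the given identity.

    Assumption: class_weights has shape (num_identities, C) and the
    activation map is a class-activation-style weighted sum over channels.
    """
    activation = np.tensordot(class_weights[identity], features, axes=([0], [0]))
    low, high = activation.min(), activation.max()
    normalised = (activation - low) / (high - low + 1e-12)   # scale to [0, 1]
    mask = (normalised < threshold).astype(features.dtype)   # keep weak regions only
    return mask[np.newaxis, :, :]


rng = np.random.default_rng(0)
features = rng.random((8, 4, 2))               # toy feature map: C=8, H=4, W=2
class_weights = rng.standard_normal((5, 8))    # hypothetical classifier, 5 identities

hard_channel = features * channel_reverse_mask(features, drop_ratio=0.25)
hard_spatial = features * spatial_reverse_mask(features, class_weights, identity=3)
```

The sketch uses hard binary masks for readability. A soft mask (for example, one minus a sigmoid attention weight) would be an equally plausible reading of "reverse attention"; the paper should be consulted for the exact form.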

Implementation

All three softmax losses are combined during training. During testing, only B and SIC are possible (since person identity is available during training only for spatial reverse attention). Encodings from both feature maps after GAP are concatenated (2048-d, i.e. 1024*2) for predictions.
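The training objective and the test-time embedding can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the paper's code: the three losses are summed with equal weights (the summary does not give the weighting), each test-time encoding is taken to be 1024-dimensional, and the function names are hypothetical.

```python
import math


def softmax_cross_entropy(logits, label):
    """Cross-entropy of softmax(logits) against an integer class label."""
    peak = max(logits)  # subtract the max for numerical stability
    log_sum_exp = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_sum_exp - logits[label]


def combined_loss(branch_logits, label):
    """Sum the per-branch softmax losses (equal weighting is an assumption)."""
    return sum(softmax_cross_entropy(logits, label) for logits in branch_logits)


def concat_embeddings(first, second):
    """Concatenate two pooled encodings into one descriptor."""
    return list(first) + list(second)


# Toy logits for three branches over three identities; the true identity is 0.
branch_logits = [
    [2.0, 0.5, -1.0],
    [1.0, 0.8, 0.1],
    [0.3, 0.2, 0.1],
]
loss = combined_loss(branch_logits, label=0)

# Two 1024-d encodings (zeros as placeholders) give a 2048-d descriptor.
embedding = concat_embeddings([0.0] * 1024, [0.0] * 1024)
```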

Notes :

The model improves mAP on three datasets (Market-1501, DukeMTMC-reID and CUHK03) by 2, 3 and 5 % respectively, but performs almost the same on rank-1, rank-5 and rank-10 accuracy. For details, check out the original paper DRANET.
