Integrate Gaze Attention

Integrating Human Gaze into Attention for Egocentric Activity Recognition

23 June 2021

Authors: Kyle Min, Jason J. Corso

URL: https://openaccess.thecvf.com/content/WACV2021/html/Min_Integrating_Human_Gaze_Into_Attention_for_Egocentric_Activity_Recognition_WACV_2021_paper.html

Comments: 2021 Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)

Categories: attention, action-recognition, egocentric

What ?

A direct-optimisation and sampling technique for better integrating human gaze into attention for first-person-video (FPV) action recognition on the EGTEA dataset.

Why ?

It improves on the paper In the Eye of Beholder.

The Gumbel-Softmax sampling used there introduces a significant bias into the gradient estimator, limiting its performance. Directly integrating the sampled gaze map can also cause confusion, because gaze measurements are inaccurate.

How ?

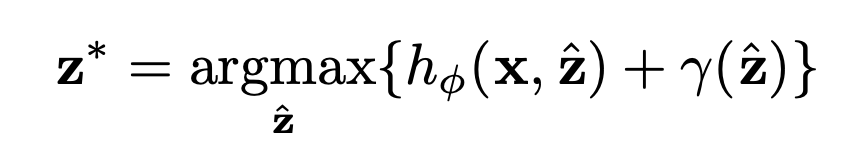

The authors use the same loss function as their competitors. Instead of curating an attention grid, they sample the gaze points through the Gumbel-Max trick.

Gamma denotes random samples drawn from a Gumbel distribution.

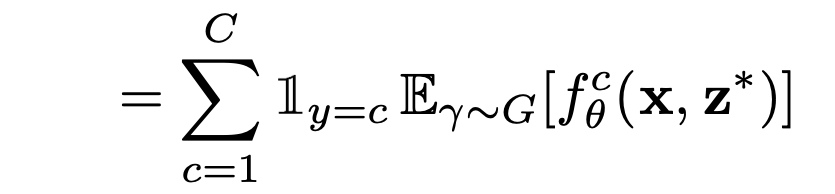
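To make the sampling step concrete, here is a minimal sketch of the Gumbel-Max trick in plain Python. It assumes the gaze distribution is given as a flat list of unnormalised log-probabilities, one per grid cell. The function names `sample_gumbel` and `gumbel_max_sample` are hypothetical and not taken from the paper.

```python
import math
import random


def sample_gumbel(rng):
    """Draw one sample from the standard Gumbel(0, 1) distribution."""
    # Inverse-CDF sampling: -log(-log(U)) with U ~ Uniform(0, 1).
    u = rng.random()
    while u == 0.0:  # rng.random() can return 0.0, and log(0) is undefined
        u = rng.random()
    return -math.log(-math.log(u))


def gumbel_max_sample(logits, rng):
    """Return the index of one gaze cell sampled with the Gumbel-Max trick.

    Adding independent Gumbel noise to each logit and taking the argmax
    yields a sample from the categorical distribution softmax(logits).
    """
    perturbed = [h + sample_gumbel(rng) for h in logits]
    return max(range(len(logits)), key=lambda i: perturbed[i])


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical 3-cell gaze distribution with probabilities 0.7, 0.2, 0.1.
    logits = [math.log(0.7), math.log(0.2), math.log(0.1)]
    counts = [0, 0, 0]
    for _ in range(20000):
        counts[gumbel_max_sample(logits, rng)] += 1
    print([c / 20000 for c in counts])
```

Over many draws the empirical frequencies should approach the softmax of the logits. This is the property that lets the argmax act as an exact sampler rather than a relaxation.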

They then propose to formulate the log-likelihood using the class-wise log-probabilities as a function of x, the image features, and z, the sampled gaze.

: log pθ(y | x, z)

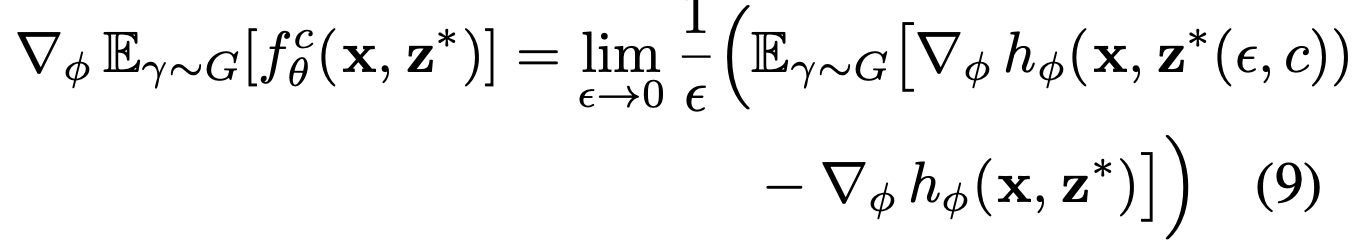

The gradient is then a sum of multiple expectation terms of the class-wise log-probabilities, each multiplied by an indicator function, where each class gradient estimator is computed using direct optimisation.

where,

: hφ(x, z) = log qφ(z|x)

The bias of the estimator is controlled by a perturbation parameter, which is set high initially and then progressively lowered.

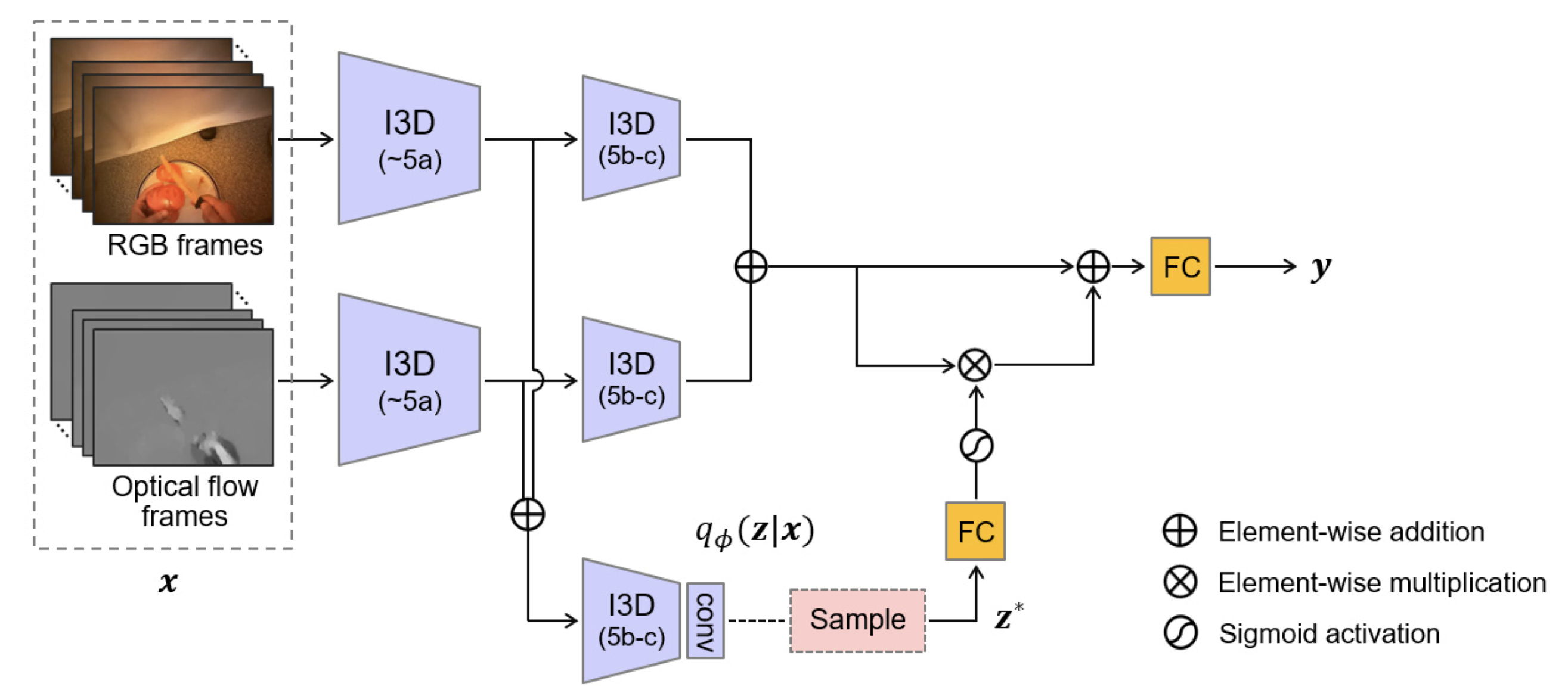
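The direct-optimisation estimator can be illustrated in a toy setting. The sketch below is not the authors' implementation. It assumes that hφ(x, z) is simply a logit vector indexed by z, so its gradient with respect to the logits is a one-hot vector. It also assumes a fixed score vector `scores` standing in for the class-wise log-probability, and a fixed perturbation parameter `epsilon`. All function names are hypothetical.

```python
import math
import random


def gumbel(rng):
    """Standard Gumbel(0, 1) sample via the inverse CDF."""
    u = rng.random()
    while u == 0.0:  # avoid log(0)
        u = rng.random()
    return -math.log(-math.log(u))


def argmax(values):
    return max(range(len(values)), key=lambda i: values[i])


def direct_gradient_estimate(logits, scores, epsilon, num_samples, rng):
    """Monte Carlo estimate of d E[scores[z]] / d logits, z ~ softmax(logits).

    Toy assumption: h(x, z) = logits[z], so grad h is one-hot at z.
    """
    n = len(logits)
    grad = [0.0] * n
    for _ in range(num_samples):
        noise = [gumbel(rng) for _ in range(n)]
        # z*: Gumbel-Max sample from the unperturbed distribution.
        z_star = argmax([h + g for h, g in zip(logits, noise)])
        # z*(epsilon): same noise, logits shifted by epsilon * scores.
        z_eps = argmax(
            [epsilon * f + h + g for f, h, g in zip(scores, logits, noise)]
        )
        # (1 / epsilon) * (one_hot(z_eps) - one_hot(z_star))
        grad[z_eps] += 1.0 / epsilon
        grad[z_star] -= 1.0 / epsilon
    return [g / num_samples for g in grad]


def exact_gradient(logits, scores):
    """Closed-form gradient p_i * (f_i - E[f]) for comparison."""
    m = max(logits)
    exps = [math.exp(h - m) for h in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    mean_score = sum(p * f for p, f in zip(probs, scores))
    return [p * (f - mean_score) for p, f in zip(probs, scores)]


if __name__ == "__main__":
    rng = random.Random(0)
    logits, scores = [0.0, 0.0, 0.0], [1.0, 0.0, -1.0]
    print(direct_gradient_estimate(logits, scores, 0.1, 100000, rng))
    print(exact_gradient(logits, scores))
```

In this toy setting the estimate is a finite difference in `epsilon`: a larger value gives lower variance but more bias, and a smaller value gives the opposite. That trade-off is one plausible reading of why the perturbation parameter starts high and is lowered during training.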

The sampled gaze distribution is fed to an FC layer followed by a sigmoid to act as a soft-attention map.
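As a rough illustration of this last step, the sketch below passes a flattened gaze map through one fully connected layer and a sigmoid. The layer sizes, the weight values, and the function name `soft_attention_map` are assumptions made for the example. The paper's actual dimensions and learned parameters are not given here.

```python
import math


def sigmoid(v):
    """Numerically stable logistic function."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)


def soft_attention_map(gaze, weights, bias):
    """Map a flattened gaze sample to soft attention weights in (0, 1).

    gaze:    list of n values (e.g. a one-hot sampled gaze cell)
    weights: m rows of n values each (the FC layer's weight matrix)
    bias:    list of m values
    """
    return [
        sigmoid(sum(w * g for w, g in zip(row, gaze)) + b)
        for row, b in zip(weights, bias)
    ]


if __name__ == "__main__":
    # Hypothetical 4-cell grid with the sampled gaze on cell 1.
    gaze = [0.0, 1.0, 0.0, 0.0]
    # Illustrative weights: a scaled identity, so each output looks at one cell.
    weights = [[4.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    bias = [-2.0] * 4
    print(soft_attention_map(gaze, weights, bias))
```

Because the sigmoid keeps every weight strictly between 0 and 1, cells away from the sampled gaze point are attenuated rather than discarded. This fits the motivation that gaze measurements can be inaccurate.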

Notes :

Structured gaze modelling with direct optimisation performs better than other reparameterization techniques such as Gumbel-Softmax.
